Over the past five years, the use of large language models (LLMs) and advanced machine translation (MT) has accelerated dramatically in the translation and localization industry.

Despite this surge, LLMs, by their very nature, present key challenges for the translation industry. This is why we ultimately believe they'll be passed over in favour of another AI model that more closely fits the bill: the Small Language Model.

Early on, neural machine translation (NMT) systems, the predecessors of today's LLMs, were adopted cautiously. In 2019, only about 13% of translation projects for end clients used MT.

However, rapid improvements in quality and efficiency drove wider uptake. By 2020, roughly 24% of projects involved MT – nearly double the previous year.

The advent of true large language models around 2022 gave an additional boost to the automation of translation workflows.

Today, integrating LLMs into translation workflows is standard practice at virtually every tech-forward organization.

Nimdzi analysts note that “the new wave of AI tools, such as large language models (LLMs) [and] LLM-enhanced machine translation, ... are quickly finding their way into translation workflows” as features inside translation management systems, content platforms, subtitling tools and more.

In short, what was a niche, experimental technology just over five years ago has become a core component of translation and localization operations today.

Generalists can never outperform specialists

Large Language Models are hugely powerful and flexible, and they perform general tasks extremely well. However, their strength at general tasks, and the breadth of training data behind it, are the very reasons we predict their demise when it comes to translating content.

General-purpose LLMs generate human-like text across many topics but often miss the subtle, domain-specific nuances of specialized industries.

For example, when translating a financial report, a generic model might misinterpret the term "equity." In everyday usage, "equity" often appears in a real estate context, where it describes the difference between a property's market value and its outstanding mortgage. In accounting, however, equity refers to the ownership value in a company or its assets: what remains after liabilities are subtracted from assets.

A model that cannot make these distinctions can produce inaccurate translations or interpretations that undermine critical communications.

Because these broad models require significant computing power, the cost of running them is high and delivery can be slow. Their lack of specialization also means more human editing is needed, reducing efficiency and increasing turnaround times.

On large projects, these factors add up to higher costs and slower results, directly affecting the quality and reliability your business depends on.

"Good things come in small packages," or so the saying goes. At Straker, we have seen this first-hand in the true power of custom small language models (SLMs).

Rather than relying on general-purpose LLMs, which are jacks-of-all-trades, we've found success in building and training SLMs targeted at specific industries or tasks.

By focusing exclusively on localization, our models have achieved unmatched accuracy and nuanced understanding, mastering industry jargon, technical terms, and linguistic complexities where generic models falter.

Straker built Tiri as a family of small, specialized AI models designed specifically for localization. Instead of a single generalist model, Tiri operates like a team of expert translators, each trained to handle particular languages and industries with precision.

Tiri models are trained on high-quality, domain-specific data, such as translation memories and translation history, enabling them to understand financial, legal, or technical terminology with exceptional accuracy.

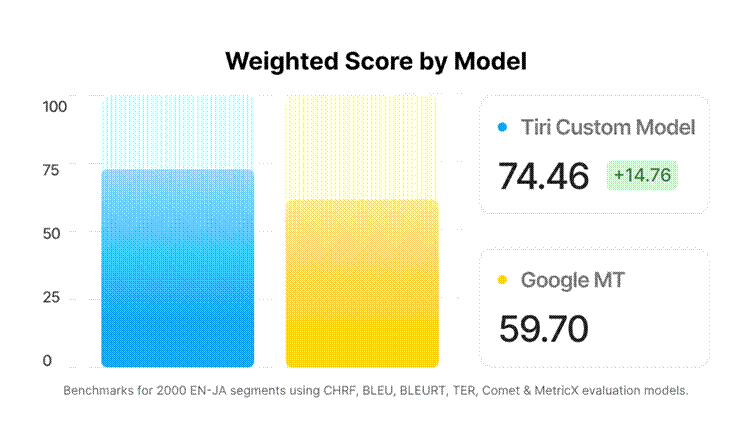

For example, the performance of our specialized AI models, such as Tiri J for Japanese-to-English investor relations content, demonstrates how customizing language AI to specific industries and client needs dramatically improves translation quality. These models deliver contextually accurate, industry-relevant results far beyond what general commercial solutions provide.

Because Tiri models are smaller and more focused, they require fewer computing resources, enabling faster processing speeds and easier integration into existing workflows without massive infrastructure costs. The result is lower costs and quicker turnaround times, helping your business scale efficiently while maintaining high-quality output.

Tiri is built to learn and improve through human feedback integrated directly into Straker’s product suite. This Reinforcement Learning from Human Feedback (RLHF) approach ensures that translations become more accurate and aligned with client needs over time.

Straker knows the future of AI-powered language services lies in specialization. Custom Small Language Models, like Tiri, are revolutionizing how companies localize content, delivering superior accuracy, speed, and efficiency by focusing on what matters most: understanding language in context.

● Higher accuracy – Industry-ready terminology and domain-specific context out of the box.

● Lower costs – Smaller models require fewer compute cycles.

● Faster turnaround – Translate, score, and publish in hours, not weeks.

While general-purpose LLMs started the revolution in the translation industry, we believe Small Language Models will finish it, delivering hyper-focused, industry-specific translations at speed and scale without the cost and compute power of LLMs.

If your business needs precision and scalability in localization, Tiri is the AI solution designed to help you succeed in an increasingly global marketplace.

Business leaders: Learn more about Tiri at https://www.straker.ai/ai-platform/tiri

Engineers: Explore our technology principles at https://labs.straker.ai